by Melanie Klein, Beth Jarosz, and Chris Dick

The dataindex.us team is excited to launch the Data Checkup – a comprehensive framework for assessing the health of federal data collections, highlighting key dimensions of risk and presenting a clear status of data well-being.

When we started dataindex.us, one of our earliest tools was a URL tracker: a simple way to monitor whether a webpage or data download link was up or down. In early 2025, that kind of monitoring became urgent as thousands of federal webpages and datasets went dark.

As many of those pages came back online, often changed from their original form, we realized URL tracking wasn’t sufficient. Threats to federal data are coming from multiple directions, including loss of capacity, reduced funding, targeted removal of variables, and the termination of datasets that don’t align with administration priorities.

The more important question became: how do we assess the risk that a dataset might disappear, change, or degrade in the future? We needed a way to evaluate the health of a federal dataset that was broad enough to apply across many types of data, yet specific enough to capture the different ways datasets can be put at risk. That led us to develop the Data Checkup.

Once we had an initial concept, we brought together experts from across the data ecosystem to critique it. The current Data Checkup framework reflects feedback from more than 30 colleagues.

The result is a framework built around six dimensions:

Historical Data Availability

Future Data Availability

Data Quality

Statutory Context

Staffing and Funding

Policy

Each dimension is assessed and assigned a status that communicates its level of risk:

Gone

High Risk

Moderate Risk

No Known Issue

Together, these assessments provide a more complete picture of dataset health than availability checks alone.

The Data Checkup is designed to serve the needs of both data users and data advocates. It supports a wide range of use cases, including academic research, policy decision-making, journalism, advocacy, and litigation.

Different users will have different tolerances for data risk. A researcher may be most concerned about changes to methodology, while a litigator may be most concerned that a statutory publication deadline was missed. Because it assesses multiple dimensions of risk, the Data Checkup gives users the flexibility to focus on the risks most relevant to their work.
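
For readers who want to work with checkup results programmatically, here is a minimal Python sketch of that idea: one record per dataset with a status for each of the six dimensions, plus a helper that returns only the flagged dimensions a particular user cares about. The names `Status`, `DIMENSIONS`, and `flagged`, the severity ordering, and the example record are illustrative assumptions, not part of the dataindex.us tooling.

```python
from enum import IntEnum


class Status(IntEnum):
    """The four statuses. Ranking them by severity is an assumption."""
    NO_KNOWN_ISSUE = 0
    MODERATE_RISK = 1
    HIGH_RISK = 2
    GONE = 3


# The six dimensions named in the framework.
DIMENSIONS = (
    "Historical Data Availability",
    "Future Data Availability",
    "Data Quality",
    "Statutory Context",
    "Staffing and Funding",
    "Policy",
)


def flagged(checkup: dict[str, Status], focus: list[str]) -> dict[str, Status]:
    """Return the focus dimensions whose status is worse than No Known Issue."""
    unknown = [d for d in focus if d not in DIMENSIONS]
    if unknown:
        raise ValueError(f"unknown dimensions: {unknown}")
    return {d: checkup[d] for d in focus if checkup[d] > Status.NO_KNOWN_ISSUE}


# Hypothetical record for illustration only, not a real assessment.
example = {d: Status.NO_KNOWN_ISSUE for d in DIMENSIONS}
example["Staffing and Funding"] = Status.HIGH_RISK
example["Policy"] = Status.MODERATE_RISK

# A researcher focused on methodology might look at only two dimensions.
print(flagged(example, ["Data Quality", "Policy"]))
```

A litigator could call the same helper with `["Future Data Availability", "Statutory Context"]` instead, without changing the underlying record.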

By surfacing risks early, before data is lost, degraded, or unusable, the Data Checkup helps data users and advocates make informed decisions about using, maintaining, and protecting the federal datasets they rely on.

The Data Checkup Framework

Let’s take a closer look at the Data Checkup framework, exploring each dimension and the risk levels it can be assigned.

Historical Data Availability

Assessment of the availability of existing (historical) data and resources associated with the data collection.

| Status | Criteria |
| --- | --- |
| Gone | Historical data files are no longer publicly available. |
| High Risk | Some historical data files are removed. |
| Moderate Risk | Some historical data elements are removed. |
| No Known Issue | Historical data remain accessible with no known alterations. |

Future Data Availability

Assessment of whether continued data collection and publication are likely and on schedule.

| Status | Criteria |
| --- | --- |
| Gone | Data collection and publication have been terminated. |
| High Risk | Statutory publication deadline missed and/or collection/publication skipped and/or Information Collection Request (ICR) expired for more than one year. |
| Moderate Risk | Typical or intended publication date missed and/or collection/publication delayed; ICR expired up to one year. |
| No Known Issue | Data published on time or as expected and ICR active or renewed before expiration. |
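
The criteria above can be read as a small decision procedure, sketched here in Python. The field names, the function `classify_future_availability`, and the choice to treat "one year" as 365 days are assumptions made for illustration; the real assessments also rely on analyst judgment that this sketch does not capture.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class FutureAvailabilityFacts:
    """Hypothetical inputs for one dataset (field names are assumptions)."""
    terminated: bool = False
    statutory_deadline_missed: bool = False
    release_skipped: bool = False
    expected_date_missed: bool = False  # typical/intended date missed or release delayed
    icr_expiration: Optional[date] = None  # None if no ICR date is known


def classify_future_availability(facts: FutureAvailabilityFacts, today: date) -> str:
    """Apply the Future Data Availability criteria, most severe first."""
    if facts.terminated:
        return "Gone"

    # Days since the ICR lapsed; 0 if it is still active or unknown.
    days_expired = 0
    if facts.icr_expiration is not None and facts.icr_expiration < today:
        days_expired = (today - facts.icr_expiration).days

    # Assumption: "more than one year" means more than 365 days.
    if facts.statutory_deadline_missed or facts.release_skipped or days_expired > 365:
        return "High Risk"
    if facts.expected_date_missed or days_expired > 0:
        return "Moderate Risk"
    return "No Known Issue"


# Hypothetical example: an ICR that lapsed three months before the check.
facts = FutureAvailabilityFacts(icr_expiration=date(2025, 3, 1))
print(classify_future_availability(facts, today=date(2025, 6, 1)))
```

Checking the most severe condition first means a dataset that meets both a High Risk and a Moderate Risk criterion is reported at the higher level, which matches the "and/or" wording of the criteria.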

Data Quality

Assessment of whether continued data collection is of similar quality to historical data.

| Status | Criteria |
| --- | --- |
| Gone | Data collection and publication have been terminated. |
| High Risk | Reductions in granularity, timeliness, or frequency. |
| Moderate Risk | Potential or emerging risk to granularity, timeliness, or frequency. |
| No Known Issue | Maintained or improved granularity, timeliness, or frequency. |

Statutory Context

Assessment of the strength of statutory requirements and authorization, as well as program reliance on continued data collection and publication.

| Status | Criteria |
| --- | --- |
| High Risk | Statutory authorization is vague and/or there are alternative data collections that could serve as substitutes and/or no known programmatic use. |
| Moderate Risk | Not explicitly required by statute but required for the implementation of a state or federal program and there are no alternative data collections that could serve as substitutes. |
| No Known Issue | Statutorily required and/or statutory authorization is explicitly named and it is clear what must be collected and there are no alternative data collections that could serve as substitutes and/or required for implementation of a federal program. |

Staffing and Funding

Assessment of whether the agency has sufficient staff, budget, and leadership stability to sustain data collection and publication.

| Status | Criteria |
| --- | --- |
| Gone | All of the staff in the division or agency are gone and/or all funding has been terminated. |
| High Risk | 40% or more of staff lost, and/or 1,000 or more staff lost, and/or budget cut by 20% or more, and/or leadership removed. |
| Moderate Risk | 10-39% of staff lost, and/or 500-999 staff lost, and/or budget cut by 10-19%, and/or threatened change in leadership. |
| No Known Issue | Less than 10% of staff lost and less than 10% of budget cut and no known change in leadership. |
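
Because this dimension uses numeric thresholds, it lends itself to a worked example. The sketch below applies the thresholds above in Python. The function name, its parameters, and the three `leadership` labels are assumptions made for illustration, as is the handling of fractional percentages (for example, 39.5% of staff lost is treated as Moderate Risk because it is below 40%).

```python
def classify_staffing_and_funding(
    staff_before: int,
    staff_lost: int,
    budget_cut_pct: float,
    leadership: str = "stable",
) -> str:
    """Apply the Staffing and Funding thresholds, most severe first.

    leadership is one of "stable", "threatened", or "removed"
    (hypothetical labels for the three leadership situations in the criteria).
    """
    if staff_before <= 0:
        raise ValueError("staff_before must be positive")
    if leadership not in {"stable", "threatened", "removed"}:
        raise ValueError(f"unknown leadership value: {leadership!r}")

    staff_lost_pct = 100 * staff_lost / staff_before

    # Gone: all staff gone and/or all funding terminated.
    if staff_lost >= staff_before or budget_cut_pct >= 100:
        return "Gone"
    # High Risk: any one of the four conditions is enough ("and/or").
    if (staff_lost_pct >= 40 or staff_lost >= 1000
            or budget_cut_pct >= 20 or leadership == "removed"):
        return "High Risk"
    # Moderate Risk: again, any one condition is enough.
    if (staff_lost_pct >= 10 or staff_lost >= 500
            or budget_cut_pct >= 10 or leadership == "threatened"):
        return "Moderate Risk"
    return "No Known Issue"


# Hypothetical agency: 2,000 staff, 300 lost (15%), 5% budget cut.
print(classify_staffing_and_funding(2000, 300, 5.0))
```

Note how the absolute and percentage thresholds interact: a hypothetical agency of 20,000 that loses 1,200 staff has lost only 6% of its workforce, but the 1,000-staff threshold still places it at High Risk.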

Policy

Assessment of whether specific policy actions have been or are being taken to alter or discontinue data collection and publication.

| Status | Criteria |
| --- | --- |
| Gone | Data collection and publication have been terminated. |
| High Risk | Presidential Action-driven ICR; negative policy note on site; other significant changes in accordance with Administration priorities. |
| Moderate Risk | Proposed or pending changes; statements by administration officials suggesting a change is being considered or planned. |
| No Known Issue | No notable changes since January 2025 affecting what data is collected and published. |

Putting the Framework into Practice

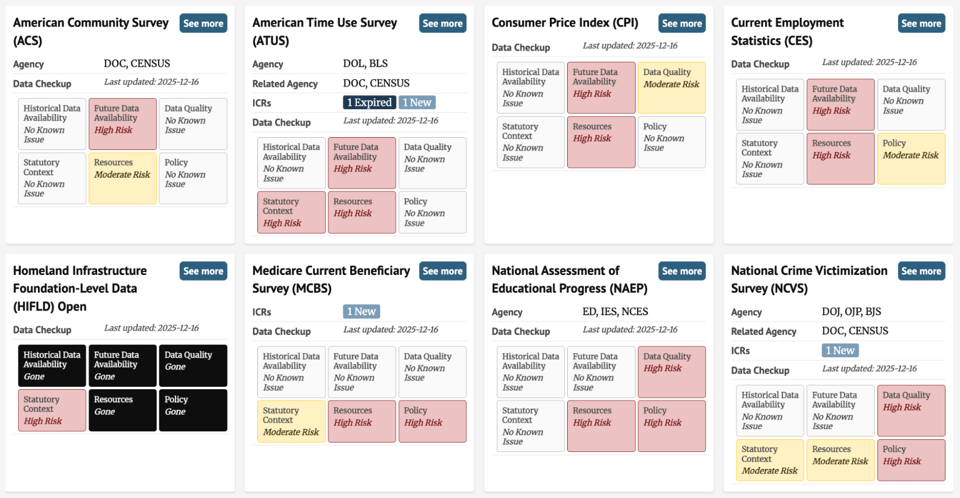

Here you can see the Data Checkup framework applied to a subset of datasets. At a high level, it provides a snapshot of dataset well-being, allowing you to quickly identify which datasets are facing risks.

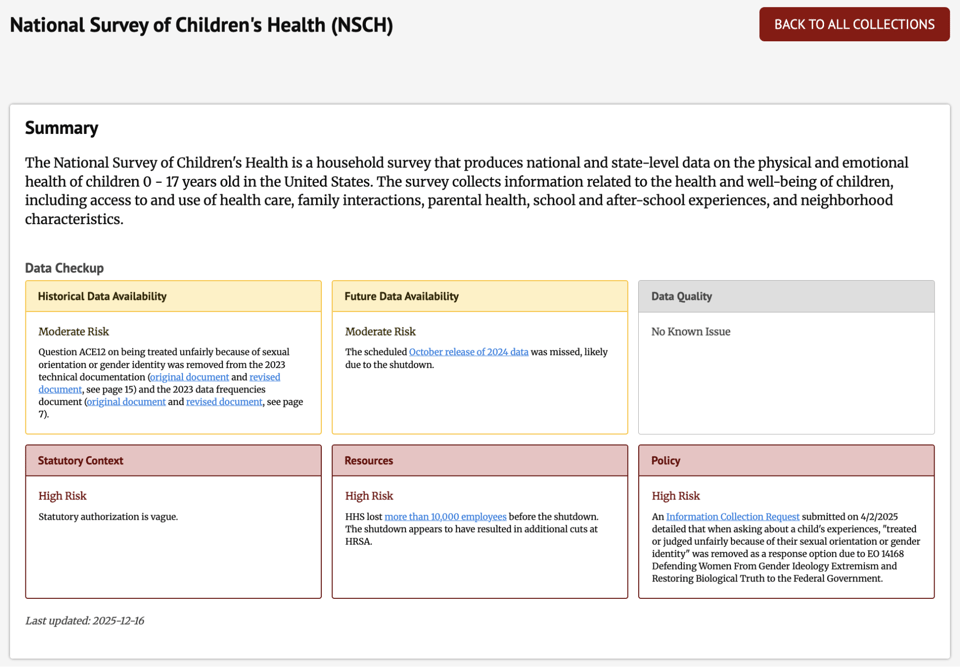

Zooming in on a specific dataset reveals additional information, including citations and explanations for why each dimension was assigned its risk level. This detailed view helps users understand the reasoning behind the assessment.

What’s Next for the Data Checkup

This launch marks the first version of the Data Checkup. We expect the framework to go through multiple iterations as the risk landscape evolves.

As the tool evolves, new assessments will be published, the coverage of monitored datasets will expand, and the assessment will be automated (where possible). Data Checkups will be updated quarterly, with additional ad hoc updates in response to major developments.

We welcome your feedback. If there is a dataset you would like to see monitored, or if you have expertise on the health of a dataset, we’d love to hear from you.

Together, we hope the Data Checkup serves as a shared resource for monitoring the health of federal datasets.